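What are linear regression and linear models, and how are they related? How do we fit and evaluate a linear regression model, and how do we do it in R?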

Linear regression and linear models

First, here is the definition from Wikipedia:

In statistics, linear regression is a linear approach to modeling the relationship between a scalar response (or dependent variable) and one or more explanatory variables (or independent variables). The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression.

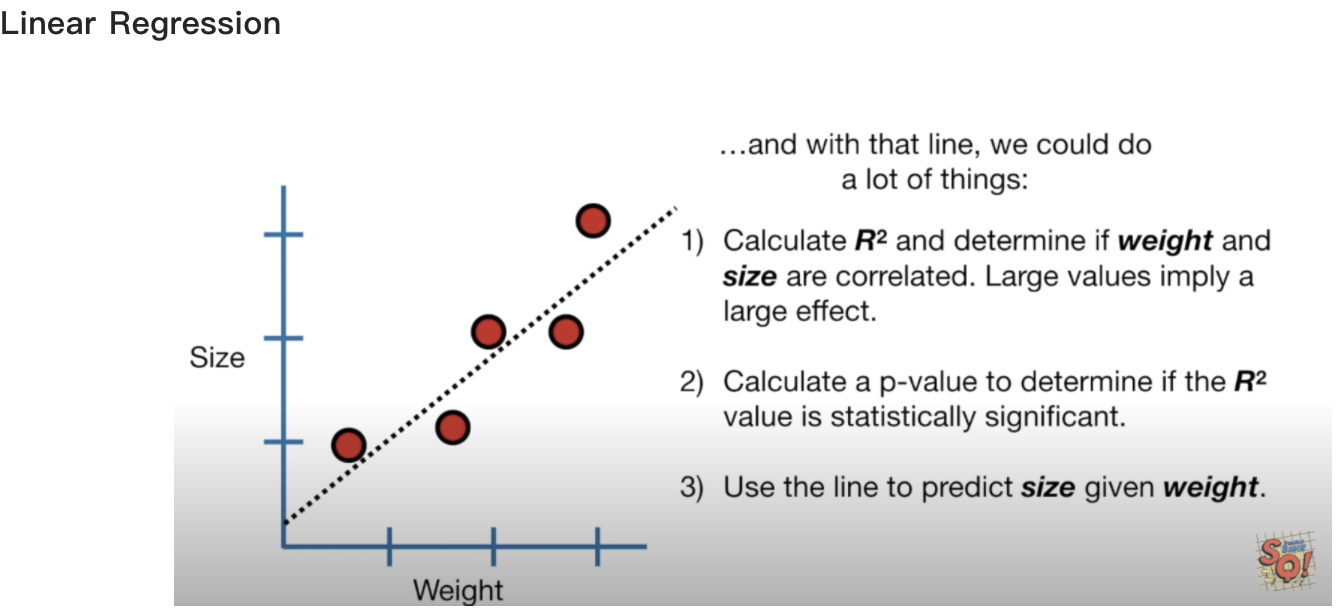

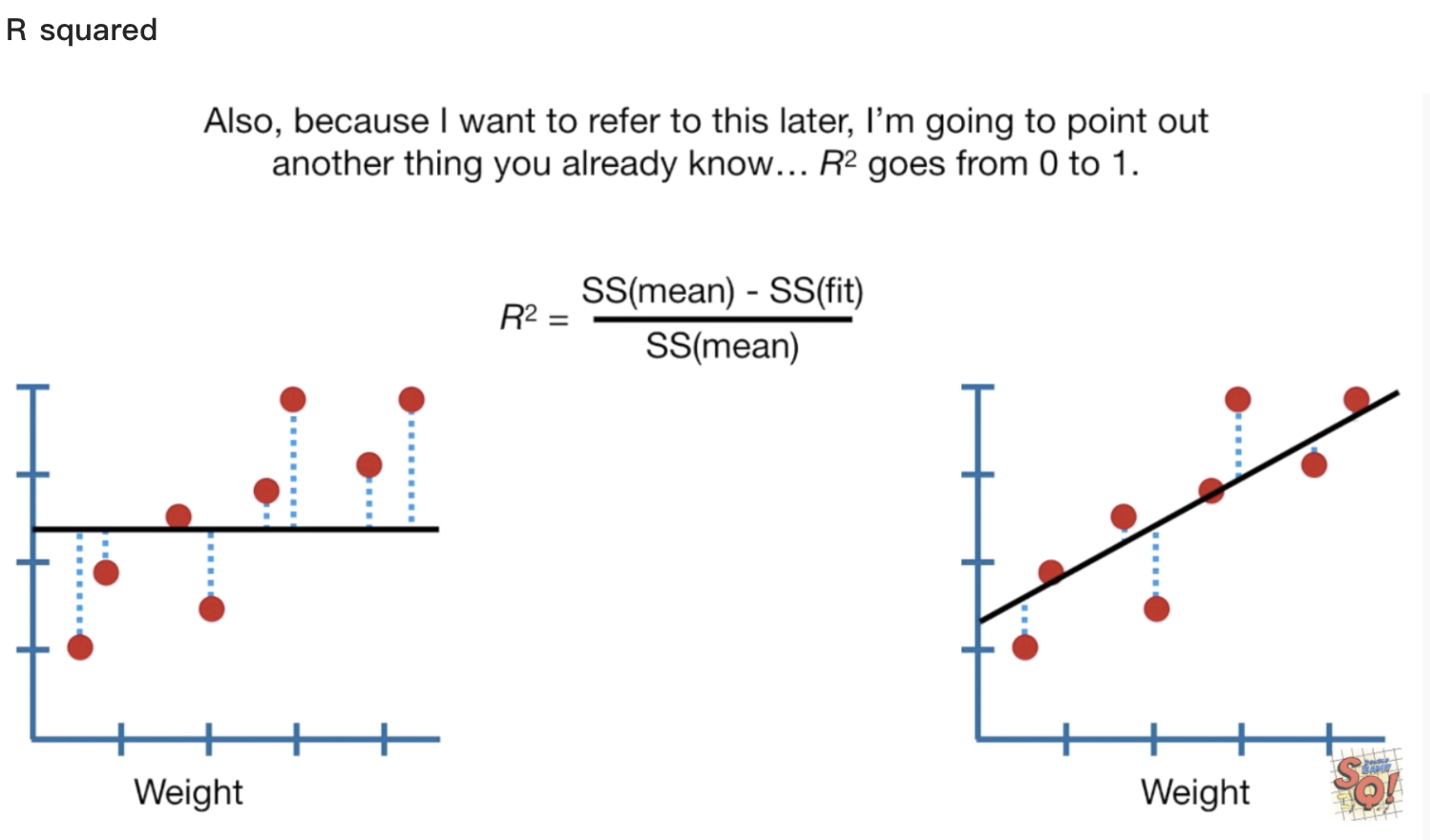

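Linear regression looks for a linear relationship between variables and is one branch of linear models. The best-fitting model is usually found with the least squares method, which chooses the coefficients that minimize the sum of squared residuals. Goodness of fit is assessed with R squared (R²), the proportion of the variance in the response that the model explains: a larger R² indicates a better fit. Because R² never decreases when predictors are added, adjusted R² is the better choice when comparing models with different numbers of predictors. In R, linear regression is fitted with the lm() function, using the formula syntax lm(dependent_variable ~ independent_variables, data = <data frame>).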
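As a minimal sketch of the fit-and-evaluate workflow, the R code below simulates data, fits it with `lm()`, and checks the result against the textbook definitions. The data set `dat` and the true coefficients (intercept 2, slope 3, noise sd 2) are made up for illustration. The least-squares coefficients are recomputed from the normal equations (XᵀX)β = Xᵀy, and R² is recomputed as 1 − SS_residual / SS_total. Both should agree with what `lm()` and `summary()` report.

```r
# Simulated data (illustrative assumption): y = 2 + 3 * x + normal noise
set.seed(42)
dat <- data.frame(x = runif(100, min = 0, max = 10))
dat$y <- 2 + 3 * dat$x + rnorm(100, sd = 2)

# Least-squares fit; formula syntax is response ~ predictors
fit <- lm(y ~ x, data = dat)
print(coef(fit))  # estimated intercept and slope

# Same estimates from the normal equations (X'X) beta = X'y
X <- cbind(1, dat$x)  # design matrix with an intercept column
beta_hat <- solve(crossprod(X), crossprod(X, dat$y))

# R squared = 1 - SS_residual / SS_total
ss_res <- sum(residuals(fit)^2)
ss_tot <- sum((dat$y - mean(dat$y))^2)
r2_manual <- 1 - ss_res / ss_tot

# summary() reports both R squared and adjusted R squared
fit_summary <- summary(fit)
print(c(manual = r2_manual,
        lm = fit_summary$r.squared,
        adjusted = fit_summary$adj.r.squared))
```

For multiple linear regression, the same call simply lists more predictors on the right-hand side of the formula, e.g. `lm(y ~ x1 + x2, data = dat)`.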

p-value correction: Bonferroni correction and the Benjamini and Hochberg method

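Bonferroni correction is a relatively strict way of correcting p-values: it controls the family-wise error rate, i.e. the probability of making at least one false positive. FDR (false discovery rate) control is another, less strict approach, and the Benjamini and Hochberg method (BH) is one way of carrying it out.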

The p-value threshold is chosen by convention. A smaller p-value means the observed result would be less likely if the null hypothesis were true, but it does not prove that the result is real. For example, with a significance threshold of 0.05 and 10,000 tests in which the null hypothesis is actually true, we expect about 0.05 × 10,000 = 500 false positives. The number of false positives grows with the number of tests, so we need a multiple-testing correction to keep it under control.
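A quick simulation makes the figure of 500 concrete. This sketch assumes each test is a two-sample `t.test()` on two groups of 10 standard-normal values, an arbitrary choice for illustration. Because both groups always come from the same distribution, every p-value below 0.05 is a false positive.

```r
# 10,000 tests where the null hypothesis is always true:
# both groups are drawn from the same standard normal distribution
set.seed(1)
n_tests <- 10000
p_values <- replicate(n_tests, t.test(rnorm(10), rnorm(10))$p.value)

# Every "significant" result here is a false positive
false_positives <- sum(p_values < 0.05)
print(false_positives)  # should land near 0.05 * 10,000 = 500
```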

Two correction methods are commonly used:

1) Bonferroni Correction

The Bonferroni method is the simplest and bluntest correction. It does not remove false positives outright; instead, it lowers the p-value threshold so that the probability of even one false positive across all tests stays at or below the original threshold.

The Bonferroni threshold is α/n, where α is the original p-value threshold and n is the total number of tests. If the original threshold is 0.05 and there are 10,000 tests, the corrected threshold is 0.05/10,000 = 0.000005. In that case, if every null hypothesis is true, the expected number of false positives across the 10,000 tests is 10,000 × 0.000005 = 0.05, well below 1.
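In R, the correction can be applied in two equivalent ways: compare raw p-values with α/n, or inflate the p-values to n·p (capped at 1) and compare them with α. The second form is what base R's `p.adjust(method = "bonferroni")` returns. The six p-values below are made up for illustration.

```r
# Hypothetical p-values from n = 6 tests
p <- c(0.000001, 0.000004, 0.00002, 0.001, 0.03, 0.2)
alpha <- 0.05
n <- length(p)

# Option 1: compare raw p-values with the corrected threshold alpha / n
sig_threshold <- p < alpha / n

# Option 2: adjust the p-values (n * p, capped at 1) and compare with alpha
p_bonf <- p.adjust(p, method = "bonferroni")
sig_adjusted <- p_bonf < alpha

print(alpha / n)      # corrected threshold
print(p_bonf)         # Bonferroni-adjusted p-values
print(sig_threshold)  # which tests remain significant
```

Both options flag the same four tests. Here 0.03 would pass an uncorrected 0.05 threshold but fails the corrected threshold 0.05/6 ≈ 0.0083.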

However, the Bonferroni correction is often too stringent: the corrected threshold filters out not only false positives but also many true positives, which increases false negatives.
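To illustrate the cost in true positives, here is a sketch on simulated data. It assumes 9,000 tests with no real effect and 1,000 with a real effect. Each test is summarized by a z statistic, with real effects shifted to mean 3, an arbitrary effect size chosen for illustration. The code counts how many real effects survive with and without the Bonferroni correction.

```r
# Hypothetical mixture: 9,000 null tests and 1,000 tests with a real effect
set.seed(2)
m_null <- 9000
m_alt <- 1000
z <- c(rnorm(m_null, mean = 0), rnorm(m_alt, mean = 3))
is_real <- c(rep(FALSE, m_null), rep(TRUE, m_alt))
p <- 2 * pnorm(-abs(z))  # two-sided p-values from the z statistics

raw_sig <- p < 0.05
bonf_sig <- p.adjust(p, method = "bonferroni") < 0.05

# True positives (real effects detected) and false positives, before and after
print(c(raw_true = sum(raw_sig & is_real), raw_false = sum(raw_sig & !is_real)))
print(c(bonf_true = sum(bonf_sig & is_real), bonf_false = sum(bonf_sig & !is_real)))
```

Without correction, hundreds of false positives get through. Bonferroni removes almost all of them, but it also discards most of the real effects, because the threshold drops to 0.05/10,000.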

2) FDR (False Discovery Rate)

FDR corrects p-values more gently than Bonferroni. Instead of guarding against every single false positive, it balances false positives against false negatives by controlling the false discovery rate: the expected proportion of false positives among all results declared significant. For example, if we set an FDR threshold of 0.05 for 10,000 tests, then among the tests called significant we expect at most 5% to be false positives. This is written as FDR < 0.05.
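Because FDR is an expected proportion, it can be estimated by simulation: repeat an experiment many times, record the share of significant results that are false in each run, and average. The sketch below assumes 900 null tests and 100 tests with a real effect (z mean 3) per run. Both numbers are arbitrary, and `fdp_one_run` is a hypothetical helper written for this example. It uses base R's `p.adjust(method = "BH")`, the Benjamini and Hochberg method discussed next, and compares it with no correction.

```r
# Hypothetical helper: false discovery proportion for one simulated experiment
fdp_one_run <- function(adjust_method, m_null = 900, m_alt = 100, alpha = 0.05) {
  z <- c(rnorm(m_null), rnorm(m_alt, mean = 3))
  is_real <- c(rep(FALSE, m_null), rep(TRUE, m_alt))
  p <- 2 * pnorm(-abs(z))  # two-sided p-values
  sig <- p.adjust(p, method = adjust_method) < alpha
  # share of significant results that are false (0 if nothing is significant)
  sum(sig & !is_real) / max(sum(sig), 1)
}

# Average the proportion over 200 runs to estimate the FDR
set.seed(3)
fdr_none <- mean(replicate(200, fdp_one_run("none")))
fdr_bh <- mean(replicate(200, fdp_one_run("BH")))
print(c(unadjusted = fdr_none, BH = fdr_bh))
```

With no correction, roughly a third of the "significant" results are false in this setup. With BH at 0.05, the estimated FDR stays at or below 0.05.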

How is FDR obtained from p-values? Several procedures exist; the most widely used is the Benjamini and Hochberg method, also known as the BH method.

The BH method sorts the p-values of all m tests in ascending order, so that p(1) is the smallest and p(k) is the p-value with rank k.

Find the largest k that satisfies p(k) ≤ α·k/m, where α is the chosen FDR threshold, and declare the tests ranked 1 through k significant. The corresponding adjusted value, often called the q value, is q = p(k)·m/k. In practice it is also made monotone by taking, for each rank, the minimum of this quantity over that rank and all larger ranks, and it is capped at 1.
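The BH steps can be written out directly and checked against base R's `p.adjust(method = "BH")`. This is a minimal sketch: `bh_adjust` and `bh_reject` are hypothetical helper names, and the ten p-values are made up for illustration.

```r
# Hypothetical helper: BH-adjusted p-values (q values)
bh_adjust <- function(p) {
  m <- length(p)
  o <- order(p)                 # ascending: smallest p-value gets rank 1
  q <- p[o] * m / seq_len(m)    # q(k) = p(k) * m / k
  q <- rev(cummin(rev(q)))      # running minimum from the largest rank down
  q <- pmin(q, 1)               # cap at 1
  q[order(o)]                   # return in the original order
}

# Hypothetical helper: the step-up rule, largest k with p(k) <= alpha * k / m
bh_reject <- function(p, alpha = 0.05) {
  m <- length(p)
  ranked <- sort(p)
  passing <- which(ranked <= alpha * seq_len(m) / m)
  if (length(passing) == 0) return(rep(FALSE, m))
  k <- max(passing)
  p <= ranked[k]                # tests ranked 1..k are significant
}

p <- c(0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216)
print(bh_adjust(p))
print(p.adjust(p, method = "BH"))  # should match bh_adjust(p)
print(bh_reject(p))
```

For these values, only the first two tests pass (0.001 ≤ 0.005 and 0.008 ≤ 0.010). Equivalently, they are the only ones with q ≤ 0.05. Note that 0.039 fails even though it is below 0.05.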

References:

StatQuest linear regression videos: https://www.youtube.com/playlist?list=PLblh5JKOoLUIzaEkCLIUxQFjPIlapw8nU